Cortex AI

What is Cortex AI

Cortex AI is Snowflake’s native AI and machine learning layer.

The service lets teams run large language models, ML inference, and data analysis directly inside Snowflake, without moving data to external AI platforms. Cortex AI operates on warehouse compute and keeps data inside the Snowflake security boundary.

What Cortex AI includes

Cortex AI bundles several AI capabilities under one Snowflake-managed layer.

The core components focus on text, reasoning, and model execution against data stored in Snowflake.

Large language models (LLMs)

Cortex AI provides access to hosted LLMs inside Snowflake.

Teams use these models for summarization, classification, text generation, translation, and semantic search, all through SQL and Snowflake APIs.

Snowflake manages model hosting, scaling, and isolation.

SQL-native AI functions

Cortex AI exposes AI features as SQL functions.

Engineers and analysts call models directly in queries, views, or pipelines, without custom services or external APIs. The workflow stays inside the warehouse.
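A minimal sketch of what "AI as a SQL function" looks like in practice: the helper below only generates a query string that calls SNOWFLAKE.CORTEX.SUMMARIZE and SNOWFLAKE.CORTEX.SENTIMENT on one text column. The table and column names (support_tickets, ticket_id, body) are hypothetical, and the helper itself is illustrative, not part of any Snowflake library.

```python
# Minimal sketch: generate a query that calls Cortex SQL functions inline.
# Table and column names used below are hypothetical. Identifiers are
# interpolated directly, so they must come from trusted code, not user input.


def ticket_insights_sql(table: str, id_col: str, text_col: str, limit: int = 100) -> str:
    """Return a SELECT that summarizes and scores one text column per row."""
    return (
        f"SELECT {id_col},\n"
        f"       SNOWFLAKE.CORTEX.SUMMARIZE({text_col}) AS summary,\n"
        f"       SNOWFLAKE.CORTEX.SENTIMENT({text_col}) AS sentiment\n"
        f"FROM {table}\n"
        f"LIMIT {limit}"
    )


if __name__ == "__main__":
    print(ticket_insights_sql("support_tickets", "ticket_id", "body"))
```

The LIMIT keeps a first experiment small, since every returned row sends its text through the model.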

Data grounding and context

Models run against Snowflake data.

Teams pass tables, documents, or query results to the model as context. Grounding prompts in warehouse data can reduce hallucinations and keeps outputs tied to real records.
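One way to ground a prompt is to serialize rows from an earlier query into the prompt text and ask the model to answer only from that data. The sketch below assumes the rows arrive as a list of dicts and uses "mistral-large" purely as an example model name (availability varies by region). It returns SQL plus bind parameters for SNOWFLAKE.CORTEX.COMPLETE rather than splicing the prompt into the SQL string, which avoids quoting problems. Grounding lowers the chance of invented answers but does not guarantee correctness.

```python
# Sketch: ground an LLM prompt in rows returned by an earlier warehouse query.
# Assumptions: `rows` is a list of dicts; "mistral-large" is only an example
# model name, and model availability differs by region.


def build_grounded_prompt(question: str, rows: list[dict]) -> str:
    """Serialize rows into a context block and wrap it with instructions."""
    context = "\n".join(
        "; ".join(f"{col}={val}" for col, val in row.items()) for row in rows
    )
    return (
        "Answer using only the data below. "
        "If the data does not contain the answer, say so.\n\n"
        f"Data:\n{context}\n\nQuestion: {question}"
    )


def complete_statement(prompt: str, model: str = "mistral-large") -> tuple[str, tuple[str, str]]:
    """Return SQL plus bind parameters instead of splicing the prompt into SQL.

    With snowflake-connector-python's default pyformat paramstyle, this pair
    can be passed as cursor.execute(sql, params).
    """
    sql = "SELECT SNOWFLAKE.CORTEX.COMPLETE(%s, %s) AS answer"
    return sql, (model, prompt)


if __name__ == "__main__":
    rows = [{"region": "EMEA", "q3_growth": 0.12}, {"region": "APAC", "q3_growth": 0.08}]
    sql, params = complete_statement(build_grounded_prompt("Which region grew fastest in Q3?", rows))
    print(sql)
    print(params[1])
```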

How Cortex AI works

Cortex AI runs as a managed service within Snowflake.

Queries that invoke Cortex functions run on a virtual warehouse, and LLM functions are also billed in credits based on the number of tokens processed. Execution follows Snowflake’s governance, access controls, and logging.

For Snowflake-native workloads, this architecture removes the need to extract data, run a vector database outside Snowflake, or maintain separate ML infrastructure.

Common Cortex AI use cases

Text analysis on warehouse data

Teams summarize support tickets, classify logs, or analyze survey responses stored in Snowflake tables.

The work happens through SQL, scheduled jobs, or dbt models.
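A scheduled classification step can be sketched as an INSERT ... SELECT that labels only tickets not yet processed. The helper below is hypothetical, as are the table names, the columns (ticket_id, body, label, classified_at), and the category list. It calls SNOWFLAKE.CORTEX.CLASSIFY_TEXT with an array of candidate labels and reads the returned label field. The same SQL could sit in a Snowflake task, a cron job, or a dbt model.

```python
# Sketch of a scheduled classification step (for a task, cron job, or dbt model).
# Hypothetical names: the source/target tables, ticket_id, body, label, classified_at.
# Categories are assumed to be trusted, developer-defined labels.
import re

_IDENT = re.compile(r"[A-Za-z_][A-Za-z0-9_$.]*")


def classify_new_tickets_sql(source: str, target: str, categories: list[str]) -> str:
    """Label only tickets that are not yet in the target table."""
    for name in (source, target):
        if not _IDENT.fullmatch(name):
            raise ValueError(f"unexpected table name: {name!r}")
    # Snowflake array constant of quoted category strings.
    labels = ", ".join("'" + c.replace("'", "''") + "'" for c in categories)
    return (
        f"INSERT INTO {target} (ticket_id, label, classified_at)\n"
        f"SELECT t.ticket_id,\n"
        f"       SNOWFLAKE.CORTEX.CLASSIFY_TEXT(t.body, [{labels}])['label']::STRING,\n"
        f"       CURRENT_TIMESTAMP()\n"
        f"FROM {source} AS t\n"
        f"WHERE NOT EXISTS (\n"
        f"    SELECT 1 FROM {target} AS l WHERE l.ticket_id = t.ticket_id\n"
        f")"
    )


if __name__ == "__main__":
    print(classify_new_tickets_sql("raw.tickets", "analytics.ticket_labels",
                                   ["billing", "bug", "feature_request"]))
```

The NOT EXISTS filter sends each ticket to the model once, so repeated runs do not reprocess rows that already have a label.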

AI-assisted analytics

Analysts generate explanations, annotations, or natural language summaries for dashboards and reports.

Because Cortex AI outputs are ordinary query results, teams can store them in tables or views and use them in analytical workflows like any other column.

Semantic search and retrieval

Cortex AI enables search across unstructured data such as documents, notes, and text fields stored in Snowflake.

Search runs close to the data, without syncing content to external systems.
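Semantic search generally works by comparing embedding vectors. In Snowflake, functions such as SNOWFLAKE.CORTEX.EMBED_TEXT_768 produce embeddings and VECTOR_COSINE_SIMILARITY compares them, while Cortex Search offers a managed service that may also blend in keyword matching. The plain-Python toy below reimplements only the core ranking idea, cosine similarity, using made-up three-dimensional vectors and hypothetical document ids.

```python
# Toy illustration of embedding-based retrieval: rank documents by cosine
# similarity to a query vector. The 3-dimensional vectors are made up; real
# embedding models produce hundreds of dimensions.
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat zero vectors as unrelated
    return dot / (norm_a * norm_b)


def top_k(query: list[float], docs: dict[str, list[float]], k: int = 2) -> list[str]:
    """Return the ids of the k documents most similar to the query."""
    return sorted(docs, key=lambda doc_id: cosine_similarity(query, docs[doc_id]), reverse=True)[:k]


if __name__ == "__main__":
    docs = {
        "refund_policy": [0.9, 0.1, 0.0],
        "sso_setup": [0.0, 0.2, 0.9],
        "invoice_faq": [0.8, 0.3, 0.1],
    }
    print(top_k([1.0, 0.2, 0.0], docs))  # ['refund_policy', 'invoice_faq']
```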

Prototyping AI features

Product and data teams test AI-driven features using warehouse data.

Cortex AI shortens experimentation cycles by avoiding infrastructure setup and data movement.

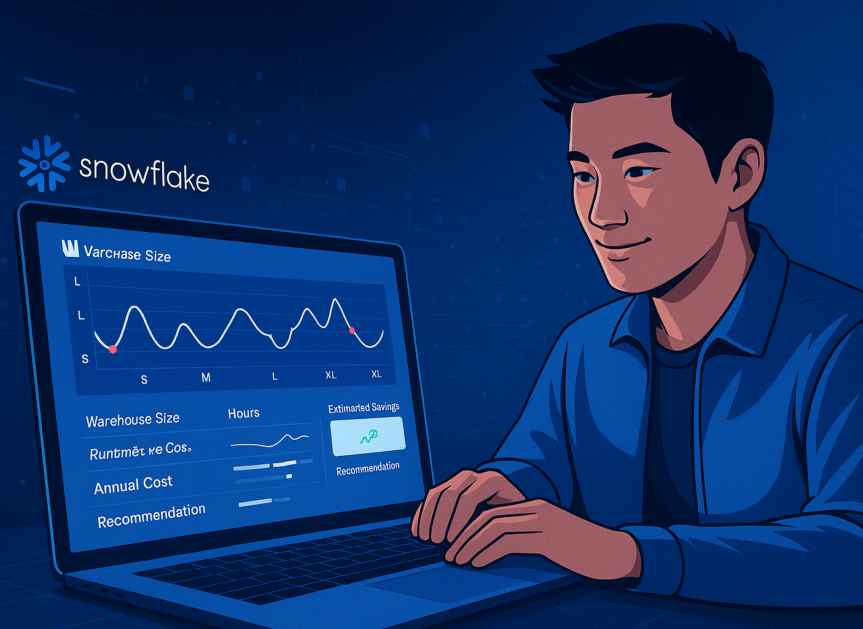

Cost considerations with Cortex AI

Cortex AI usage ties directly to Snowflake consumption.

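Queries that invoke AI models consume warehouse credits and, depending on the feature, additional Snowflake-managed service credits; LLM functions, for example, are billed by tokens processed. Because those charges scale with row count, input length, and call frequency, poorly scoped queries or high-frequency calls can create unexpected spend.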
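A back-of-the-envelope estimate shows why call frequency matters for token-billed functions. The rate of 0.5 credits per million tokens below is a placeholder, not a published Snowflake price, and avg_tokens_per_row is an assumption meant to cover every billed token (input, plus output for generating functions). Warehouse credits for running the query are not included.

```python
# Back-of-the-envelope estimate of token-based Cortex charges.
# Every rate here is a placeholder, not a published Snowflake price; look up
# the current credits-per-million-tokens rate for the function and model you use.


def estimate_token_credits(
    rows: int,
    avg_tokens_per_row: float,
    credits_per_million_tokens: float,
    runs_per_day: int = 1,
) -> float:
    """Daily credits for token-billed calls (warehouse credits not included)."""
    tokens_per_day = rows * avg_tokens_per_row * runs_per_day
    return tokens_per_day / 1_000_000 * credits_per_million_tokens


if __name__ == "__main__":
    daily = estimate_token_credits(200_000, 400, 0.5)
    hourly = estimate_token_credits(200_000, 400, 0.5, runs_per_day=24)
    print(daily, hourly)  # 40.0 960.0
```

Rerunning the same 200,000 rows every hour multiplies token spend by 24 for the same output, which is why processing only new or changed rows matters.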

Teams often struggle to answer which dashboards, jobs, or users drive Cortex-related costs.
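Answering that question means joining Cortex usage to query metadata and rolling it up. The sketch assumes records were already assembled, for example by joining Cortex usage history in SNOWFLAKE.ACCOUNT_USAGE to QUERY_HISTORY on query ID. The field names (user_name, query_tag, credits) and sample values are illustrative, not exact view columns. Setting a query tag per dashboard or job (ALTER SESSION SET QUERY_TAG) is one common way to make this grouping possible.

```python
# Sketch: roll up Cortex-related credits by user or by query tag.
# Assumption: each record was already joined from Snowflake usage views
# (for example, Cortex usage history joined to QUERY_HISTORY on query ID);
# the field names below are illustrative, not exact view columns.
from collections import defaultdict


def credits_by(records: list[dict], key: str) -> dict[str, float]:
    """Sum credits per value of `key`, largest first; missing values become 'untagged'."""
    totals: dict[str, float] = defaultdict(float)
    for rec in records:
        totals[rec.get(key) or "untagged"] += rec["credits"]
    return dict(sorted(totals.items(), key=lambda kv: kv[1], reverse=True))


if __name__ == "__main__":
    records = [
        {"query_id": "q1", "user_name": "ANALYST_A", "query_tag": "dashboard:churn", "credits": 1.5},
        {"query_id": "q2", "user_name": "ETL_SVC", "query_tag": "job:ticket_labels", "credits": 12.0},
        {"query_id": "q3", "user_name": "ANALYST_A", "query_tag": None, "credits": 0.5},
        {"query_id": "q4", "user_name": "ETL_SVC", "query_tag": "job:ticket_labels", "credits": 8.0},
    ]
    print(credits_by(records, "query_tag"))
    # {'job:ticket_labels': 20.0, 'dashboard:churn': 1.5, 'untagged': 0.5}
    print(credits_by(records, "user_name"))
    # {'ETL_SVC': 20.0, 'ANALYST_A': 2.0}
```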

Governance and security

Cortex AI inherits Snowflake’s security model.

Access controls, role-based permissions, and audit logs apply to AI queries the same way they apply to standard SQL workloads. Data stays inside Snowflake’s environment.
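Snowflake’s documentation describes a SNOWFLAKE.CORTEX_USER database role that carries the privilege to call Cortex LLM functions and is granted to PUBLIC by default; check the current docs, since access controls evolve. The sketch below generates statements that grant the role to specific roles and then revoke the default PUBLIC grant. The role names are hypothetical, and an administrator (for example, ACCOUNTADMIN) would typically run the output.

```python
# Sketch: generate statements that limit Cortex access to specific roles.
# Role names are hypothetical. The statements are typically run by an
# administrator (for example, ACCOUNTADMIN).
import re

_ROLE = re.compile(r"[A-Za-z_][A-Za-z0-9_$]*")


def cortex_access_statements(allowed_roles: list[str], revoke_from_public: bool = True) -> list[str]:
    stmts = []
    for role in allowed_roles:
        if not _ROLE.fullmatch(role):
            raise ValueError(f"unexpected role name: {role!r}")
        stmts.append(f"GRANT DATABASE ROLE SNOWFLAKE.CORTEX_USER TO ROLE {role};")
    # Grant to the intended roles first, then remove the default PUBLIC grant.
    if revoke_from_public:
        stmts.append("REVOKE DATABASE ROLE SNOWFLAKE.CORTEX_USER FROM ROLE PUBLIC;")
    return stmts


if __name__ == "__main__":
    print("\n".join(cortex_access_statements(["ANALYST", "ETL_SVC"])))
```

Ordering the grants before the revoke avoids a window in which the intended roles lose access.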

Cortex AI limitations

Cortex AI focuses on Snowflake-native workflows.

Teams with heavy external model training, custom architectures, or multi-cloud AI pipelines may still rely on separate ML platforms. Query-based AI also requires careful cost control and usage monitoring.

How SeemoreData helps manage Cortex AI usage

SeemoreData tracks Cortex AI queries at the warehouse level.

The platform connects AI usage to specific users, dashboards, and pipelines, so teams see where AI-driven spend originates and which assets consume it.

Lineage and cost attribution help teams control Cortex-related costs before they escalate. For a deeper look, see The Hidden Cost of Snowflake Cortex AI.

Key takeaways

Cortex AI brings AI execution directly into Snowflake.

The approach simplifies architecture, reduces data movement, and speeds up AI adoption. But AI queries still consume real warehouse resources, which makes visibility and cost control essential.