Stop paying warehouse minutes for metadata seconds!

TL;DR

A customer was burning about $30 000 per year keeping a medium warehouse up 24 × 7. Its only job? Running ten metadata‑refresh queries (one per Iceberg table) every minute. Each query took milliseconds, yet Snowflake billed the warehouse for the full minute slice.

By moving those refreshes to Snowflake Iceberg AUTO_REFRESH (a Snowpipe micro‑service), we eliminated 80 % of the warehouse compute. Snowpipe’s incremental cost was a rounding error.

1. The Invisible Leak

- 10 tasks scheduled every minute (CRON * * * * *).

- Each ran ALTER TABLE … REFRESH on an Iceberg table.

- QUERY_ATTRIBUTION_HISTORY showed micro‑costs → looked cheap.

- But Snowflake bills warehouses in whole‑minute chunks, so the idle 59 s still cost credits.

Result: a trivial workload masking a costly always‑on warehouse.

2. Spotting the Pattern

Plotting WAREHOUSE_METERING_HISTORY revealed a perfect saw‑tooth of resumes and one‑minute runtimes. Overlaying dollar spend made the leak obvious (and painful to see).

3. The Fix We Implemented (One Line!)

Snowflake can keep Iceberg metadata in sync with AWS Glue without a warehouse. Create a catalog integration and let Snowpipe do the polling:

CREATE CATALOG INTEGRATION auto_refresh_catalog_integration

CATALOG_SOURCE = GLUE

CATALOG_NAMESPACE = ‘my_catalog_namespace’ — Glue DB

TABLE_FORMAT = ICEBERG

GLUE_AWS_ROLE_ARN = ‘arn:aws:iam::123456789123:role/my-catalog-role’

GLUE_CATALOG_ID = ‘123456789123’

ENABLED = TRUE

REFRESH_INTERVAL_SECONDS = 60; — sync every minute

Then suspend or drop the old cron‑style tasks.

4. Impact in Real Dollars

| Before | After |

| Warehouse credits / day → ~$82 | ~$16 |

| Snowpipe credits / day → n/a | ~$0.40 |

| Annual spend ≈ $30 K → $6 K | Savings ≈ $24 K |

(Rounded; your mileage may vary.)

5. Monitoring & Verification

— Health check

SELECT SYSTEM$AUTO_REFRESH_STATUS(‘analytics.event_log’);

— Cost attribution

SELECT *

FROM SNOWFLAKE.ACCOUNT_USAGE.PIPE_USAGE_HISTORY

WHERE PIPE_NAME ILIKE ‘%AUTO_REFRESH%’

AND START_TIME >= DATEADD(day, -7, CURRENT_DATE);

Graph PIPE_USAGE_HISTORY vs. WAREHOUSE_METERING_HISTORY to watch the savings compound.

6. Caveats & Unsupported Scenarios

Row‑Level Deletes

AUTO_REFRESH currently does not support Iceberg tables that rely on position‑delete files. You’ll get:

external catalog with row‑level deletes is not supported

Until Snowflake removes this limitation:

- No materialized views, search optimization, or streams on those Iceberg tables.

- Dynamic tables can’t refresh snapshots that include position deletes.

Refresh Frequency vs. Latency

REFRESH_INTERVAL_SECONDS controls freshness. Lower values → faster Glue → Snowflake lag but marginally higher Snowpipe cost. We chose 60 s as the sweet spot.

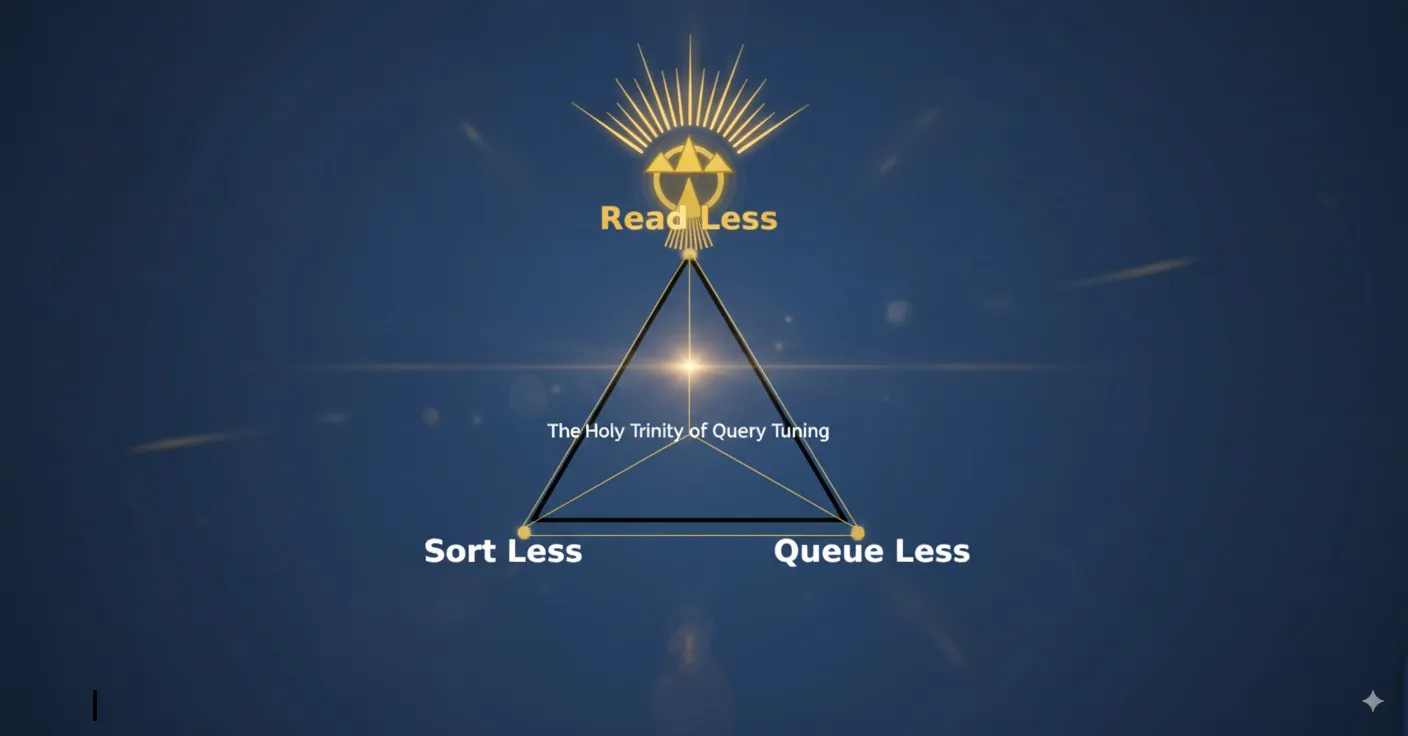

7. Key Take‑Aways

- Idle time hides in plain sight, look at warehouse uptime, not just query cost.

- Frequent, tiny tasks are prime candidates for Snowpipe Auto‑Refresh.

- One configuration change can wipe out five‑figure waste.

- Monitor everything: SYSTEM$AUTO_REFRESH_STATUS + PIPE_USAGE_HISTORY.

- Re‑invest the saved dollars in innovation.

Need Help Slashing Your Snowflake Spend? Let’s Talk

References