Cloud data warehouses have transformed how teams approach analytics, powering everything from real-time dashboards to machine learning pipelines. Their ability to scale on demand and abstract away infrastructure management has made them the backbone of modern data stacks.

But with that flexibility comes a new set of challenges, especially when it comes to cost. Pricing models vary widely across platforms, and usage-based billing means that even small inefficiencies can add up fast. Teams often discover too late that their warehouses are oversized, under-optimized, or quietly racking up charges through long-running queries, unused compute, or inefficient pipelines.

For data engineers responsible for building scalable, efficient systems, understanding the real drivers of data warehouse cost is no longer optional. It’s fundamental.

This guide breaks down the major cost components, offers frameworks for accurate estimation and platform comparison, and walks through practical, field-tested strategies to help you cut waste and maximize the value of every dollar spent.

Breaking Down Data Warehouse Cost Components

Before you can reduce spend, you need a firm grasp on what you’re paying for. While pricing structures vary by vendor, most cloud data warehouses break costs down across several dimensions: compute, storage, data movement, concurrency, and support.

Compute

Compute typically represents the largest portion of warehouse spend, and understanding how each provider handles it is crucial to managing cost effectively. Each vendor takes a different approach to pricing:

- Snowflake bills virtual warehouse time per second, with a 60-second minimum each time a warehouse starts or resumes, and offers auto-suspend and multi-cluster scaling.

- BigQuery bills on-demand queries by the volume of data they scan, or offers capacity pricing based on reserved slots (historically sold as flat-rate).

- Amazon Redshift uses node-based pricing, where instance size and count directly affect cost.

Frequent queries, complex data transformations, or workloads with high concurrency can drive costs up quickly if not carefully optimized and monitored. Even subtle inefficiencies in warehouse usage can lead to significant cost overruns over time.
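To make the three billing styles above concrete, here is a minimal sketch that models each one as a small function. Every rate in it (credits per hour, price per credit, price per TiB, price per node-hour) is a hypothetical placeholder rather than a published price, and the function names are illustrative only. The 60-second minimum reflects how per-second warehouse billing commonly works, but check your vendor's current terms.

```python
def per_second_cost(run_seconds, credits_per_hour, price_per_credit, min_seconds=60):
    """Per-second billing with a minimum charge per start (assumed 60 s)."""
    billed_seconds = max(run_seconds, min_seconds)
    return billed_seconds / 3600 * credits_per_hour * price_per_credit


def scan_cost(bytes_scanned, price_per_tib):
    """On-demand billing by data scanned, priced per TiB (2**40 bytes)."""
    return bytes_scanned / 2**40 * price_per_tib


def node_cost(node_count, hours, price_per_node_hour):
    """Node-based billing: you pay for provisioned nodes, busy or idle."""
    return node_count * hours * price_per_node_hour


if __name__ == "__main__":
    # All rates below are made-up placeholders for illustration.
    print(per_second_cost(run_seconds=30, credits_per_hour=2.0, price_per_credit=3.0))
    print(scan_cost(bytes_scanned=5 * 2**40, price_per_tib=5.0))
    print(node_cost(node_count=2, hours=24, price_per_node_hour=0.5))
```

Plugging in your own contract rates and a representative day of workload gives a rough, like-for-like view of how the same usage would be billed under each model.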

Storage

Storage is more straightforward, but still variable depending on how your cloud data infrastructure is designed:

- Active storage is for frequently queried datasets and usually priced per terabyte per month.

- Long-term storage may offer lower rates for data that is rarely accessed.

Some platforms automatically migrate cold data to lower-cost storage tiers. Others require manual setup of archival policies and table TTLs.
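As a rough illustration of tiered storage pricing, the sketch below splits tables into active and long-term tiers by how long they have gone unmodified. The 90-day threshold and both rates are assumptions chosen for the example, not any vendor's actual terms.

```python
def monthly_storage_cost(tables, active_rate, long_term_rate, long_term_after_days=90):
    """Estimate monthly storage cost across two tiers.

    tables: list of (size_tb, days_since_last_modified) tuples.
    Rates are per TB per month; the 90-day cutoff is an assumed default.
    """
    active_tb = sum(size for size, age in tables if age < long_term_after_days)
    long_term_tb = sum(size for size, age in tables if age >= long_term_after_days)
    return active_tb * active_rate + long_term_tb * long_term_rate


if __name__ == "__main__":
    # Hypothetical inventory: 2 TB of hot data, 3 TB untouched for 200 days.
    inventory = [(2.0, 10), (3.0, 200)]
    print(monthly_storage_cost(inventory, active_rate=20.0, long_term_rate=10.0))
```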

Data Movement

Data movement often flies under the radar. Costs can arise from:

- Ingest: Pulling large volumes from streaming systems like Kafka or cloud object storage.

- Egress: Moving data out of the warehouse, especially across cloud regions.

- Replication: Maintaining high-availability systems or analytics in multiple geographies.

If your pipelines rely heavily on cross-region transfers or hybrid deployments, these charges can add up fast.

Concurrency and Scaling

Scaling mechanisms differ between vendors. While concurrency scaling improves performance under load, it can quietly increase costs:

- BigQuery uses reservation slots.

- Snowflake adds compute clusters.

- Redshift launches concurrency scaling clusters.

Left unmonitored, these features can inflate your monthly bill significantly.

Licensing and Support

Premium support tiers, access to SLAs, and additional vendor features like governance or observability often come at extra cost. Integrations with platforms like dbt Cloud or Monte Carlo may also introduce recurring charges.

The underlying data warehouse architecture (how compute, storage, and ingestion layers are set up) has a direct influence on how much you’ll pay and where optimizations can happen.

Factors Influencing Data Warehouse Cost Estimation

Cost estimation in cloud data warehousing is rarely a one-line formula. Accurate forecasting requires accounting for both technical and organizational realities.

Start with these key variables:

- Data Volume Growth: Monitor ingestion rates to project future storage needs. Raw data plus intermediate tables and failed job logs can compound quickly.

- Query Frequency and Complexity: Dashboards, ad hoc queries, and scheduled jobs each contribute to usage patterns. More joins, scans, or transformations mean higher compute time.

- Concurrency Requirements: Team growth or increased business reporting can demand more simultaneous resources.

- Pipeline Design: A real-time pipeline using tools like Kafka or Spark Structured Streaming is more resource-intensive than batched Airflow or dbt runs.

- Multiple Environments: Many teams mirror data environments across dev, staging, and production. Without automated cleanup or access controls, duplicate resources can idle unused.

- Platform-Specific Behaviors: Vendors differ in how they scale resources, cache queries, and allocate workloads. Misunderstanding these can lead to overprovisioning or underutilization.

Robust data warehouse cost estimation models should be tied to metrics pulled from your warehouse monitoring tools. Combine real usage data with growth forecasts to produce more accurate projections.
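One simple way to combine current usage with growth forecasts is a compound-growth projection. The sketch below is a deliberately naive model under stated assumptions: storage and compute each grow at a fixed monthly rate, and all inputs (starting volume, rates, growth percentages) are hypothetical values you would replace with numbers from your own monitoring data.

```python
def project_monthly_cost(storage_tb, storage_growth, storage_rate,
                         compute_cost, compute_growth, months):
    """Project total monthly cost for each of the next `months` months.

    storage_growth and compute_growth are fractional monthly growth rates
    (0.10 means 10% per month). storage_rate is cost per TB per month.
    """
    projections = []
    for month in range(1, months + 1):
        tb = storage_tb * (1 + storage_growth) ** month
        compute = compute_cost * (1 + compute_growth) ** month
        projections.append(round(tb * storage_rate + compute, 2))
    return projections


if __name__ == "__main__":
    # Hypothetical starting point: 10 TB stored, 1000/month of compute.
    for month, cost in enumerate(project_monthly_cost(10, 0.10, 20.0, 1000.0, 0.05, 6), 1):
        print(f"month {month}: {cost}")
```

Real workloads rarely grow this smoothly, so treat the output as a baseline to compare against actual bills rather than a forecast to commit to.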

How to Perform a Data Warehouse Cost Comparison

Choosing a warehouse provider requires more than just feature matching. Conducting a structured data warehouse cost comparison is essential before any long-term commitment.

Start with the basics:

| Feature | Snowflake | BigQuery | Redshift |
| --- | --- | --- | --- |
| Compute | Pay-per-second | On-demand or flat-rate | Instance-based (RA3 or DC2) |
| Storage | Tiered, compressed | Auto-managed, long-term discounts | Requires compression, manual tuning |
| Concurrency Scaling | Multi-cluster, autoscaling | Slot-based with limits | Adds temporary clusters |
| Cost Controls | Auto-suspend, credit monitors | Flat-rate reservations available | Reserved instances, monitoring |

It is important to consider the hidden nuances behind these pricing models:

- Snowflake bills compute only while a warehouse is running, but each resume incurs a minimum charge (60 seconds), so frequent suspend-and-resume cycles can inflate usage.

- BigQuery’s on-demand model charges each query by the data it scans unless you use flat-rate capacity, so inefficient SQL can lead to high costs.

- Redshift has a more traditional model, requiring reservations to lock in savings.

Consider how your actual workload behaves. A team whose 10+ dashboards run frequent, complex queries every hour might benefit from BigQuery’s slot-based model. Another team running long batch jobs may find Snowflake more cost-efficient by using smaller warehouses that auto-suspend between runs.

As an example, one team migrated a 2 TB analytics workload from Redshift to Snowflake. By enabling auto-suspend on their dev and test environments and applying column pruning in key queries, they cut compute costs by nearly 40% in the first quarter after switching.

To make the best choice, combine billing simulation tools, vendor calculators, and internal benchmarks against representative workloads.

Strategies to Cut Data Warehouse Costs

Once you understand where the costs originate, you can apply focused strategies to reduce spend across compute, storage, and operations. Below are six practical tactics to implement immediately.

Optimize SQL Queries

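Poorly written SQL is among the most common sources of wasted compute. Replace SELECT * with explicit column lists, filter early, avoid unnecessary joins, and keep CTEs from repeating the same expensive work. Take advantage of result reuse features such as Snowflake’s result cache or BigQuery’s cached query results, and consider materialized views for expensive aggregations that run repeatedly.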
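To see why dropping SELECT * matters in a columnar warehouse, here is a back-of-the-envelope sketch. The schema, the per-column byte widths, and the row count are all hypothetical, and the model ignores compression and encoding, so it shows the shape of the saving rather than a real bill.

```python
# Hypothetical average bytes per value for each column of an orders table.
COLUMN_BYTES = {
    "order_id": 8,
    "customer_id": 8,
    "order_ts": 8,
    "amount": 8,
    "notes": 200,  # wide free-text column
}


def scanned_bytes(row_count, columns=None):
    """Estimate bytes read by a query; columns=None models SELECT *."""
    selected = COLUMN_BYTES if columns is None else columns
    return row_count * sum(COLUMN_BYTES[name] for name in selected)


if __name__ == "__main__":
    rows = 1_000_000
    full = scanned_bytes(rows)
    pruned = scanned_bytes(rows, ["order_ts", "amount"])
    print(f"SELECT *: {full} bytes, two columns: {pruned} bytes "
          f"({pruned / full:.1%} of the full scan)")
```

On platforms that bill by data scanned, this reduction maps directly to cost. On time-billed warehouses the effect is indirect: less data read generally means shorter runtimes.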

Partition and Cluster Tables

Segmenting large datasets reduces scan costs and improves performance. Partitioning by date or region is common, and clustering on high-cardinality fields can reduce scanned data significantly.

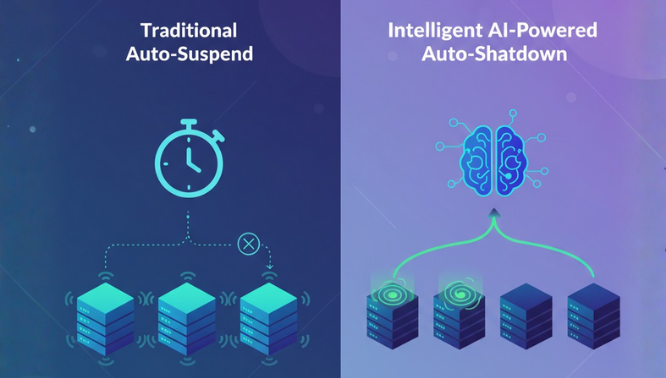
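The effect of date partitioning on scan volume can be sketched in a few lines. This is a simplified model that assumes one partition per day of equal size (both hypothetical); it only illustrates that a date filter lets the engine skip partitions outside the requested range.

```python
from datetime import date, timedelta


def build_partitions(start, days, bytes_per_day):
    """Create one equally sized partition per day, keyed by date."""
    return {start + timedelta(days=i): bytes_per_day for i in range(days)}


def bytes_scanned(partitions, date_from=None, date_to=None):
    """Sum the sizes of partitions that fall inside the (inclusive) filter.

    With no filter, every partition is read, as in an unpartitioned scan.
    """
    return sum(
        size
        for day, size in partitions.items()
        if (date_from is None or day >= date_from)
        and (date_to is None or day <= date_to)
    )


if __name__ == "__main__":
    parts = build_partitions(date(2024, 1, 1), days=365, bytes_per_day=10**9)
    full = bytes_scanned(parts)
    last_week = bytes_scanned(parts, date(2024, 12, 24), date(2024, 12, 30))
    print(f"full scan: {full} bytes, last 7 days: {last_week} bytes")
```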

Right-Size and Auto-Suspend Warehouses

For warehouses like Snowflake and Redshift, monitor actual usage and adjust sizing accordingly. Use auto-suspend with short idle thresholds to avoid paying for unused compute. In multi-environment setups, dev and staging often run far longer than necessary.
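To get a feel for how the idle threshold affects billed time, the following sketch replays a list of queries against a simulated warehouse. It is a simplified model under several assumptions: the warehouse resumes instantly when a query arrives, suspends exactly `auto_suspend` seconds after the last query finishes, and each running session is billed for at least 60 seconds. Real warehouses behave less precisely, so use it for comparing settings, not for predicting invoices.

```python
def billed_seconds(queries, auto_suspend, min_billed=60):
    """Estimate billed seconds for a single warehouse.

    queries: list of (start_second, duration_seconds) tuples.
    auto_suspend: idle seconds before the warehouse suspends.
    min_billed: assumed minimum billed seconds per running session.
    """
    total = 0
    session_start = None  # when the current running session began
    busy_until = None     # when the last query in the session finishes

    for start, duration in sorted(queries):
        end = start + duration
        if session_start is None:
            session_start, busy_until = start, end
        elif start < busy_until + auto_suspend:
            # Warehouse is still up (busy or idling), so the session continues.
            busy_until = max(busy_until, end)
        else:
            # Warehouse had already suspended: close the old session, resume.
            total += max(busy_until + auto_suspend - session_start, min_billed)
            session_start, busy_until = start, end

    if session_start is not None:
        total += max(busy_until + auto_suspend - session_start, min_billed)
    return total


if __name__ == "__main__":
    # Hypothetical dev workload: three 30-second queries, ten minutes apart.
    workload = [(0, 30), (600, 30), (1200, 30)]
    for threshold in (60, 600, 3600):
        print(f"auto_suspend={threshold}s -> {billed_seconds(workload, threshold)}s billed")
```

For sparse workloads like this one, a short threshold bills only a little more than the query time itself, while a long threshold keeps the warehouse running through every gap.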

Apply TTL and Archival Policies

Older data that no longer needs fast access can be archived to cheaper storage or deleted entirely. Use table-level TTLs or lifecycle policies where supported. This keeps your storage footprint lean and reduces the amount of data your ETL jobs and queries have to work through.
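Where a platform does not enforce TTLs for you, a periodic audit can surface candidates. The sketch below assumes you can export a table inventory with a last-accessed date and a size for each table; the inventory format, table names, and 365-day TTL are all hypothetical.

```python
from datetime import date


def ttl_candidates(tables, today, ttl_days):
    """Return (sorted table names, total TB) for tables idle longer than ttl_days.

    tables: dict mapping table name -> (last_accessed_date, size_tb).
    """
    expired = {
        name: size_tb
        for name, (last_accessed, size_tb) in tables.items()
        if (today - last_accessed).days > ttl_days
    }
    return sorted(expired), sum(expired.values())


if __name__ == "__main__":
    inventory = {
        "events_2021": (date(2022, 1, 5), 4.0),
        "orders": (date(2024, 5, 30), 1.5),
        "tmp_backfill": (date(2023, 6, 1), 0.5),
    }
    names, reclaimable_tb = ttl_candidates(inventory, date(2024, 6, 1), ttl_days=365)
    print(f"archive or drop: {names} ({reclaimable_tb} TB)")
```

A list like this is a starting point for review, since some rarely accessed tables are kept on purpose for compliance or audit reasons.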

Eliminate Unused Resources

Review your warehouse for idle dashboards, expired datasets, and old test environments. Delete unused users and restrict creation of large compute resources without review. Audit storage to remove unnecessary versions or duplicates.
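A usage review like this can start from the warehouse's query history. The sketch below assumes a simplified log in which each entry records the asset that was queried; the log format and asset names are hypothetical, and real query history usually needs parsing to map queries back to dashboards or models.

```python
from collections import Counter


def unused_assets(all_assets, query_log, min_queries=1):
    """Return assets queried fewer than min_queries times in the log.

    all_assets: iterable of asset names that currently exist.
    query_log: iterable of dicts, each with an "asset" key.
    """
    counts = Counter(entry["asset"] for entry in query_log)
    # Counter returns 0 for assets that never appear in the log.
    return sorted(name for name in all_assets if counts[name] < min_queries)


if __name__ == "__main__":
    assets = ["dash_sales", "dash_ops", "model_churn"]
    log = [
        {"asset": "dash_sales", "user": "ana"},
        {"asset": "dash_sales", "user": "bo"},
        {"asset": "model_churn", "user": "ana"},
    ]
    print(unused_assets(assets, log))
    print(unused_assets(assets, log, min_queries=2))
```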

Leverage Cost Monitoring Tools

Visualization tools like Looker or Metabase can be used to track usage patterns. Combine them with alerts or reports from native billing APIs to monitor trends and identify anomalies. Dedicated cost monitoring tools such as Finout, CloudZero, or in-house dashboards give your team the visibility needed to stay proactive.
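For teams building an in-house alert, a basic anomaly check on daily spend might look like the sketch below. It flags a day whose spend sits more than a chosen number of standard deviations above the trailing window's average. The window length, threshold, and sample figures are assumptions for illustration, and this is one simple approach rather than how any particular monitoring tool works.

```python
from statistics import mean, stdev


def spend_anomalies(daily_spend, window=7, threshold=3.0):
    """Return indexes of days whose spend spikes above the trailing window.

    A day is flagged when (spend - window mean) / window stdev > threshold.
    Days with a perfectly flat history (stdev of 0) are skipped.
    """
    anomalies = []
    for i in range(window, len(daily_spend)):
        history = daily_spend[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma > 0 and (daily_spend[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


if __name__ == "__main__":
    # Hypothetical daily spend with one obvious spike on day index 7.
    spend = [100, 102, 98, 101, 99, 100, 103, 250, 101]
    print(spend_anomalies(spend))
```

Note that a large spike inflates the trailing statistics for the following days, so a production version would likely exclude flagged days from the baseline.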

Many of these tactics tie back to how your data warehouse architecture is structured. Streamlining how data is ingested, modeled, and queried ensures sustainable cost performance at scale.

How Seemore Supports Smarter Warehouse Cost Management

Once your cost strategy is in place, the next challenge is keeping it on track. Seemore gives data teams real-time visibility into warehouse behavior, so you can spot inefficiencies before they turn into expensive surprises.

The new Warehouse Optimization feature for Snowflake identifies underutilized compute, scaling missteps, and query performance bottlenecks. With a single dashboard, teams can right-size warehouses, refine auto-suspend settings, and align scaling policies with real usage patterns.

It’s not just about cutting back. Seemore highlights when scaling up may actually save costs by reducing queueing and spillage, turning performance improvements into cost wins.

Whether you’re refining your warehouse setup or scaling fast, Seemore gives you the insight to optimize confidently and continuously.

Optimize the Data Product, Not Just the Platform

One of the most overlooked sources of warehouse cost is the data product itself. Dashboards, models, and pipelines that no one uses continue to consume compute, storage, and engineering time, even if they’re technically “working.”

Seemore goes beyond infrastructure monitoring. It tracks which assets are being queried, by whom, and how often. This gives teams the visibility to identify dashboards that aren’t used, models that run daily but drive no decisions, and pipelines that feed dead-end datasets.

Instead of just tuning SQL or right-sizing compute, Seemore helps you answer the more strategic question: Should we even be running this?

By aligning warehouse cost with product usage, teams can cut waste while improving the quality and relevance of what they deliver. That’s how you move from cost control to true data efficiency.

Building a Cost-Efficient Warehouse That Scales with You

Cloud data warehouses offer unmatched agility, but that agility comes with a price. Literally. Without clear visibility into usage and cost drivers, even well-architected environments can become expensive over time.

The key is treating cost control as an ongoing practice, not a one-time fix. By understanding the core components of data warehouse cost, estimating realistically, and applying consistent optimization strategies, you can keep performance high and spending predictable.

Tools like Seemore make that process easier, helping you monitor, adjust, and scale with confidence as your data platform grows.

Looking to get ahead of your next billing cycle? Book a Seemore demo and take control of your warehouse costs today.